Introduction

This post is part 3 of a 3-part series.

- Serverless Patterns and Best Practices for AWS - Part 1 Design

- Serverless Patterns and Best Practices for AWS - Part 2 Build

- Serverless Patterns and Best Practices for AWS - Part 3 Operate (this)

In the previous posts in this series we covered the design and build aspects of your serverless application. In this one we delve into the deployment and monitoring issues you will need to consider.

Once you have figured out all the details of your function’s structure and frameworks, you can start coding, right? Wrong. There are a few questions you need to ask yourself about your Lambda environment before jumping into your code editor, because these things are easier to change now than after you go live.

Where should I hide my secrets?

Secrets are tricky because they are not normal configuration parameters that you can commit with your code; they are supposed to be, well, secret. You can store them encrypted with your app configuration, but then you have to decrypt them on deployment and manage that process in your CI/CD.

If you don't already have a secret management strategy, AWS recommends Secrets Manager for passwords and similar secrets, and it provides features such as password generation and rotation. Your Lambda functions need permission to read and decrypt the secrets, so be sure to add the appropriate IAM policies. The secrets are encrypted with KMS and stored in Secrets Manager via your CI/CD.
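As a minimal sketch of the read side, the Python function below fetches a secret with boto3's Secrets Manager `get_secret_value` call and caches it at module scope, so warm invocations do not call the API again. It assumes the secret is stored as a JSON string; the secret name `prod/db` mentioned here is hypothetical, and the client is injectable so the logic can be exercised without AWS.

```python
import json
from typing import Any, Optional

# Module-scope cache: it survives across warm invocations of the same
# execution environment and starts empty after every cold start.
_secret_cache: dict[str, dict[str, Any]] = {}


def get_secret(secret_id: str, client: Optional[Any] = None) -> dict[str, Any]:
    """Return a JSON secret from Secrets Manager, fetching it once per environment."""
    if secret_id in _secret_cache:
        return _secret_cache[secret_id]
    if client is None:
        import boto3  # lazy import so the function can be tested with a fake client
        client = boto3.client("secretsmanager")
    # Secrets Manager decrypts the value with the secret's KMS key. The role needs
    # secretsmanager:GetSecretValue (plus kms:Decrypt for a customer-managed key).
    response = client.get_secret_value(SecretId=secret_id)
    # Assumes the secret was stored as a JSON string, e.g. {"username": ..., "password": ...}.
    secret = json.loads(response["SecretString"])
    _secret_cache[secret_id] = secret
    return secret
```

With this approach a rotated secret is only picked up by a new execution environment, so if you rely on rotation you may want to add an expiry to the cache.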

An alternative to Secrets Manager is to store your secrets as SecureString parameters in Parameter Store, alongside your other parameters. Parameter Store also supports encryption with KMS but doesn't provide goodies like password generation and rotation. However, it also doesn't come with that other extra feature of Secrets Manager: cost.
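Reading a SecureString back is a single `get_parameter` call with `WithDecryption=True`. The sketch below assumes boto3; the parameter name `/myapp/prod/db-password` is a hypothetical example, and the function's role needs `ssm:GetParameter`, plus `kms:Decrypt` if the parameter uses a customer-managed KMS key.

```python
from typing import Any, Optional


def get_secure_parameter(name: str, client: Optional[Any] = None) -> str:
    """Read a SecureString parameter from SSM Parameter Store, decrypted."""
    if client is None:
        import boto3  # lazy import keeps the helper testable without AWS
        client = boto3.client("ssm")
    # WithDecryption=True asks Parameter Store to decrypt the value with its
    # KMS key; without it a SecureString is returned still encrypted.
    response = client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]
```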

How do I manage my configuration parameters?

If you have answered the previous question, then you have answered this one too, because application configuration is much like secrets, just less secretive. You can store all your application configuration parameters in your serverless config file, or you can use AWS Systems Manager (SSM) Parameter Store. Where to draw the line? As usual, it depends on a handful of things. For global parameters, e.g. DB URLs, it is recommended to use SSM because it acts as a centralised location from which many of your deployed functions can get the latest values. On the other hand, if a parameter is specific to one function, you can just store it in the serverless config file.

You can then either call the SSM API from your code to retrieve the parameter at runtime, or add environment variables to your function and let the Serverless Framework resolve their values for you at deployment. Referencing the parameter in the serverless file suits values that don't change often, perhaps once per deployment: because the value is baked in when you deploy, every change means redeploying the function, which you don't want to do frequently. If the parameter changes often, it is better to retrieve it with the SSM API as part of your code.
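The two options can be sketched in Python as follows. A value that rarely changes is read from an environment variable that was resolved at deployment (the Serverless Framework supports `${ssm:...}` variables for this), while a frequently changing value is fetched at runtime and cached for a short TTL so that not every invocation calls SSM. `DB_URL`, the 60-second TTL, and the `CachedParameter` class are illustrative assumptions, not part of any library; `fetch` would typically wrap an SSM `get_parameter` call.

```python
import os
import time
from typing import Callable, Optional

# Rarely changing value: resolved by the deployment and injected as an
# environment variable, so changing it means redeploying the function.
# DB_URL is a hypothetical variable name.
DB_URL = os.environ.get("DB_URL", "")


class CachedParameter:
    """Hypothetical helper: runtime lookup of a changing parameter, re-fetched after a TTL."""

    def __init__(self, fetch: Callable[[], str], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # e.g. a wrapper around SSM GetParameter
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._value: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Fetch on first use and again whenever the cached value has expired.
        if self._value is None or now >= self._expires_at:
            self._value = self._fetch()
            self._expires_at = now + self._ttl
        return self._value
```

The TTL is the trade-off knob: a shorter one picks up changes sooner at the cost of more SSM calls.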

How can I version my Lambdas?

Another important question: how do you know which version of your function is active, and how can you choose which version to consume? This also matters for the blue/green deployment pattern: which version is green, and which is blue?

AWS lets you publish immutable, numbered versions of a function and create aliases: named pointers to a specific version that you can use to make sure your services refer to the right one. You can point an alias at the version that has been tested and is stable, and have all your services call your function through that alias. You can also use aliases as a deployment-environment abstraction: for example, a "dev" alias can refer to a version that is not yet ready for "test", and you then reassign the "test" alias once the function passes a set of automated tests. The same concept works for your blue/green deployments.
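In a CI/CD pipeline, promoting a build might look like the sketch below: publish the current code as a new immutable version, then move an alias to it. It uses boto3's Lambda `publish_version` and `update_alias` calls; the function name `orders-api` and alias `test` are hypothetical, and the alias is assumed to exist already (create it once with `create_alias`).

```python
from typing import Any, Optional


def promote_to_alias(function_name: str, alias: str,
                     client: Optional[Any] = None) -> str:
    """Publish $LATEST as a new version and point `alias` at it; returns the version.

    Intended to run only after the automated tests have passed.
    """
    if client is None:
        import boto3  # lazy import so the sketch can be exercised with a fake client
        client = boto3.client("lambda")
    # Versions are immutable snapshots; aliases are movable pointers to them.
    version = client.publish_version(FunctionName=function_name)["Version"]
    # Assumes the alias already exists; update_alias fails for a missing alias.
    client.update_alias(FunctionName=function_name, Name=alias,
                        FunctionVersion=version)
    return version
```

Callers then invoke the function through the alias (for example `Qualifier="test"` on `invoke`) rather than a version number. For blue/green or canary rollouts, `update_alias` also accepts a `RoutingConfig` with `AdditionalVersionWeights`, which sends a fraction of the alias's traffic to a second version.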

What are cold starts?

This is a known problem in the Lambda function world. The first request to your function pays the cost of initialising the Lambda container; later requests can reuse the existing container as long as it has not been recycled, so make sure you are familiar with the Lambda execution context. Cold starts are a particular problem if you are building APIs and your function is part of a request chain where response time is critical to the API consumers. The problem gets even worse if you deploy your function within a VPC, as the container needs to create an ENI in your VPC, which adds more time to initialisation (AWS has announced a huge improvement to the way it initialises Lambdas in VPCs). You can get around this by calling your function periodically to keep it warm; there is a serverless plugin that can do this for you by deploying another Lambda that invokes your Lambdas and keeps them warm. AWS has also recently announced Provisioned Concurrency, which keeps a configured number of instances initialised and can also help to address this issue.
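Two habits help here, sketched below in Python: do expensive initialisation at module scope so warm invocations reuse it, and let keep-warm pings return immediately. The warm-up marker is an assumption: it matches the default `{"source": "serverless-plugin-warmup"}` payload of serverless-plugin-warmup, so adjust it to whatever your warmer actually sends.

```python
from typing import Any

# Assumed warm-up marker: serverless-plugin-warmup sends this payload by default.
WARMUP_SOURCE = "serverless-plugin-warmup"

# Module-scope state is created once per execution environment, during the
# cold start, and reused by every warm invocation that follows. Expensive
# setup such as SDK clients or DB connections belongs here too.
_invocations = 0


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    global _invocations
    if event.get("source") == WARMUP_SOURCE:
        # Keep-warm ping: return straight away without running business logic.
        return {"warmed": True}
    _invocations += 1
    return {
        "statusCode": 200,
        "body": f"invocation {_invocations} in this environment",
    }
```

The counter makes the reuse visible: it keeps increasing across invocations served by the same warm container and resets after a cold start.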

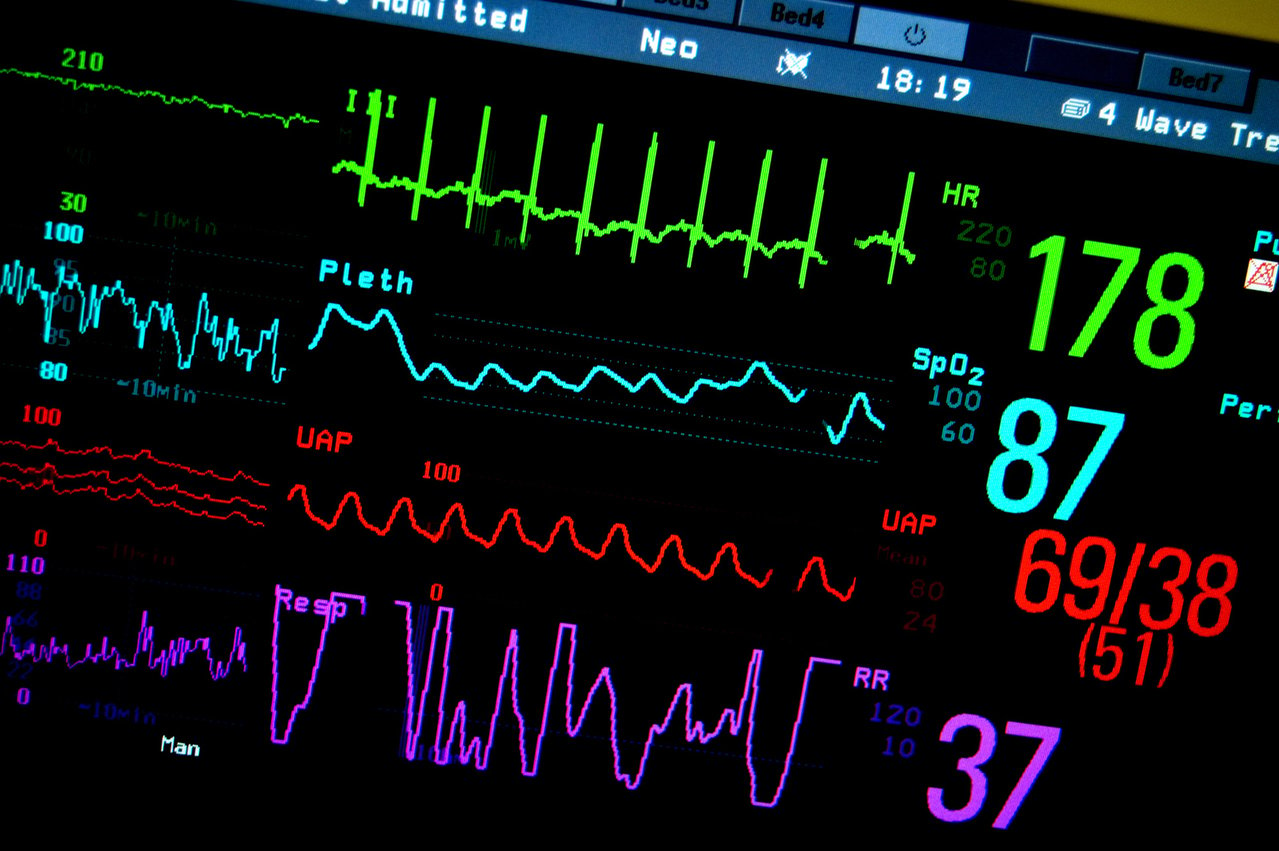

Why should I care about monitoring?

Monitoring your functions in action is very important because it provides the real-life data you measure your design against. Are your functions fast enough? What are the bottlenecks in your request journey, and how can you remove them? Only real load can uncover these things, which is why you need to keep an eye on what is happening in your production environment. You also need to set up alerts to notify you whenever something goes wrong. Many tools support monitoring Lambda functions, and in most cases you will be leveraging your incumbent monitoring solution. You can also check out the service map in AWS X-Ray, which gives you an overall visual representation of your functions. If you want a more detailed monitoring solution that gives you additional levels of information plus many further integration options, you can try a third-party monitoring solution.
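Functions only show up in the X-Ray service map once active tracing is enabled. A minimal sketch using boto3's `update_function_configuration`, with a hypothetical function name; the execution role also needs permission to upload traces, for example the `AWSXRayDaemonWriteAccess` managed policy.

```python
from typing import Any, Optional


def enable_active_tracing(function_name: str, client: Optional[Any] = None) -> None:
    """Turn on X-Ray active tracing so the function appears in the service map."""
    if client is None:
        import boto3  # lazy import so the helper can be tested with a fake client
        client = boto3.client("lambda")
    # "Active" makes Lambda sample incoming requests and send traces to X-Ray.
    client.update_function_configuration(
        FunctionName=function_name,
        TracingConfig={"Mode": "Active"},
    )
```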

What should I keep an eye on?

There is an ever-growing list of metrics that AWS provides for Lambda functions, so which of these should you focus on, and which should you use for your alerts? To answer these questions, let us first categorise the metrics into two main levels:

- Account level: metrics related to the whole AWS account, e.g. the number of function instances running concurrently at a given time

- Function level: metrics related to a specific function, e.g. the number of failed invocations

While the account-level metrics and alerts may not be your responsibility, assuming someone else is managing the AWS account for you, the function-level ones are yours. You need to know when your function fails or when it takes longer than expected. One of the important metrics is ConcurrentExecutions, the number of function instances processing events at the same time. There is a default soft limit of 1,000 concurrent executions per account per Region; once it is reached, further invocations are throttled instead of being started. Therefore, you can set up an alert when ConcurrentExecutions exceeds a reasonable threshold, e.g. 900.
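A sketch of that alarm using boto3's CloudWatch `put_metric_alarm`: the account-wide figure is the `ConcurrentExecutions` metric in the `AWS/Lambda` namespace without any dimensions. The alarm name, the one-minute period, and the optional SNS topic for notifications are assumptions to adapt to your setup.

```python
from typing import Any, Optional


def concurrency_alarm_params(threshold: int = 900,
                             sns_topic_arn: Optional[str] = None) -> dict[str, Any]:
    """Build PutMetricAlarm arguments for account-wide ConcurrentExecutions."""
    params: dict[str, Any] = {
        "AlarmName": "lambda-account-concurrent-executions",  # hypothetical name
        "Namespace": "AWS/Lambda",
        "MetricName": "ConcurrentExecutions",
        # No Dimensions: the undimensioned metric aggregates all functions in the Region.
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }
    if sns_topic_arn:
        params["AlarmActions"] = [sns_topic_arn]  # notify, e.g. via an SNS topic
    return params


def create_concurrency_alarm(threshold: int = 900, sns_topic_arn: Optional[str] = None,
                             client: Optional[Any] = None) -> None:
    if client is None:
        import boto3  # lazy import so the sketch can be tested with a fake client
        client = boto3.client("cloudwatch")
    client.put_metric_alarm(**concurrency_alarm_params(threshold, sns_topic_arn))
```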

Some other metrics worth considering are:

- Duration (milliseconds): alert when Lambda execution time reaches 80% of the configured timeout, so you can investigate whether you need to increase the timeout value or optimise your function code.

- Errors (%): the Errors count as a percentage of Invocations; you can set up an alert when this percentage exceeds your acceptable SLA, e.g. 10%.
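Both alarms can be expressed as `put_metric_alarm` arguments, sketched below. The Duration threshold is derived from the function's timeout, which is configured in seconds while Duration is reported in milliseconds. An error percentage is not a native Lambda metric, so it is computed with CloudWatch metric math as `100 * errors / invocations`. The five-minute period, alarm names, and function name are assumptions.

```python
from typing import Any


def duration_alarm_params(function_name: str, timeout_seconds: int,
                          fraction: float = 0.8) -> dict[str, Any]:
    """Alarm when the slowest invocation in a period exceeds `fraction` of the timeout."""
    threshold_ms = timeout_seconds * 1000 * fraction  # Duration is in milliseconds
    return {
        "AlarmName": f"{function_name}-duration-near-timeout",
        "Namespace": "AWS/Lambda",
        "MetricName": "Duration",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": threshold_ms,
        "ComparisonOperator": "GreaterThanThreshold",
    }


def _lambda_sum(metric_id: str, name: str, function_name: str) -> dict[str, Any]:
    """A summed per-function Lambda metric used as an input to metric math."""
    return {
        "Id": metric_id,
        "MetricStat": {
            "Metric": {
                "Namespace": "AWS/Lambda",
                "MetricName": name,
                "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
            },
            "Period": 300,
            "Stat": "Sum",
        },
        "ReturnData": False,  # inputs only; the expression below is what is alarmed on
    }


def error_rate_alarm_params(function_name: str,
                            max_error_percent: float = 10.0) -> dict[str, Any]:
    """Alarm on 100 * Errors / Invocations using CloudWatch metric math."""
    return {
        "AlarmName": f"{function_name}-error-rate",
        "EvaluationPeriods": 1,
        "Threshold": max_error_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "Metrics": [
            _lambda_sum("errors", "Errors", function_name),
            _lambda_sum("invocations", "Invocations", function_name),
            {"Id": "error_rate", "Expression": "100 * errors / invocations",
             "Label": "Error rate (%)", "ReturnData": True},
        ],
    }
```

Either dictionary can be passed to `boto3.client("cloudwatch").put_metric_alarm(**params)`. `TreatMissingData` is set to `notBreaching` so that quiet periods with no invocations do not trip the error-rate alarm.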

What is the right memory for my function?

Sometimes your function is a general-purpose one and performance is not a critical factor; other times it is part of an API call that has to meet an SLA measured in milliseconds. Many things can affect your function's performance. For example, the language you choose can increase the time it takes to warm up your Lambda's container, so choose wisely. Memory size is another factor: Lambda allocates CPU power in proportion to the configured memory, so too little memory can slow your function down. There is a very handy tool that can help you decide the correct memory size for your Lambda function, and I recommend you incorporate it into your CI/CD.
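The idea behind tools of this kind can be sketched in a few lines: run the function at several memory sizes, record the average duration, and pick the cheapest configuration that still meets your latency target. Compute cost is approximated here as GB-seconds (memory in GB times duration in seconds), ignoring the per-request charge; the `Measurement` type and `pick_memory` helper are hypothetical, and the sample numbers are illustrative, not benchmark results.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Measurement:
    memory_mb: int
    avg_duration_ms: float  # measured average duration at this memory size


def gb_seconds(m: Measurement) -> float:
    """Approximate compute cost per invocation: memory (GB) x duration (s)."""
    return (m.memory_mb / 1024) * (m.avg_duration_ms / 1000)


def pick_memory(measurements: Sequence[Measurement],
                max_duration_ms: Optional[float] = None) -> Measurement:
    """Return the cheapest configuration, optionally only among those meeting a latency target."""
    candidates = [m for m in measurements
                  if max_duration_ms is None or m.avg_duration_ms <= max_duration_ms]
    if not candidates:
        raise ValueError("no memory size meets the latency target")
    # Ties on cost go to the faster configuration.
    return min(candidates, key=lambda m: (gb_seconds(m), m.avg_duration_ms))


# Illustrative numbers only, not real measurements.
samples = [Measurement(128, 2400), Measurement(512, 500),
           Measurement(1024, 260), Measurement(2048, 240)]
print(pick_memory(samples).memory_mb)                       # 512
print(pick_memory(samples, max_duration_ms=300).memory_mb)  # 1024
```

With these numbers the cheapest option overall is 512 MB, but a 300 ms target pushes the choice to 1024 MB, which is why the answer depends on whether the function sits on a latency-critical path.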

Summary

That's it, folks! We tried to cover some important questions you need to ask yourself before you start your serverless journey. When we started ours, we found a lot of articles that covered many of these questions in more detail. However, we want our articles to be a starting point that covers the most important aspects of a serverless application. You will definitely face other problems and issues that are not covered here, so if you believe we missed an important topic, please let us know.